Much of the progress in artificial intelligence over the last few years has been built on deep learning, or deep neural networks. Notably, Apple’s Siri, Google DeepMind’s AlphaGo, and the self-driving features in Tesla cars all rely on deep learning. My goal here is to make deep learning neural networks much more accessible to everyone. In this series of articles, you will gain an intuitive understanding of the logic and mathematics behind deep learning. You will also learn how to practice it by building a few models from scratch. Almost every book on the topic compares deep learning to the complicated biological neural networks of the human brain. I, however, think Super Mario Bros. can provide more intuitive and friendlier insights into deep learning than neurosurgeons can.

Deep Learning and Plumbing

I live in an eighteen-storey high-rise in Mumbai. With close to a hundred flats in the building, you can imagine that the water pipelines form quite a mesh. A few years ago, we had a problem with high water pressure in our bathroom. I asked our building’s plumber to check the pipelines and fix the water pressure. He climbed up the duct to twiddle the numerous knobs that control the flow of water, while I stayed in the bathroom and opened the tap to monitor the water flow.

The plumber used an ingenious method called “hit or miss” to fix the problem. He rotated a few knobs and shouted: “Is the water pressure reducing?” I shouted back: “No, it’s the same.” This went on for a while until he eventually gave up. Without fixing our problem, he must have also screwed up the water pressure for at least a few of our neighbors. Since then, we have decided to treat our shower and taps as acupressure devices.

This, in reality, was a job for the Super Mario Bros., the beloved plumbers from Nintendo’s famous video game of the eighties. Let’s see how they would have solved the problem using deep learning.

Water Pipelines and Deep Learning Neural Networks

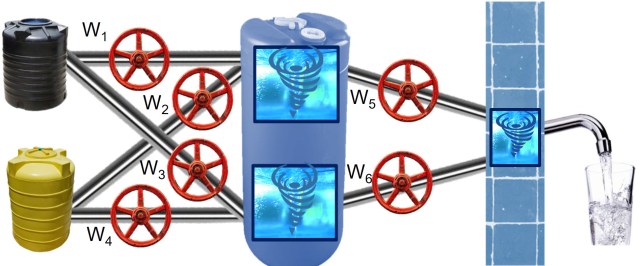

Let’s create a much simpler layout of pipelines to understand the water pressure problem in my bathroom. In the process, we will learn how deep learning works. Imagine two water tanks that supply water to the building. These tanks are connected by pipelines to a storage unit in the middle that has multiple chambers. These middle chambers are the hidden nodes, which form the hidden layer of a neural network; I will come back to them shortly. Note that the hidden layers have special non-linear properties. I have drawn this non-linear behavior as turbulence, or a whirl, inside the hidden and output chambers (nodes).

The flow of water, or the water pressure, from the tap is managed by the six circular red knobs. In neural network terms, these knobs are the weights of the network. Essentially, the entire problem of managing the water pressure comes down to finding the optimal settings for these six knobs. Here, the yellow and black input tanks on the left are the input variables, and the water coming out of the tap on the right is the output variable.

For example, the input variables could be a person’s education and years of experience, and the output from the tap could be that person’s salary. If we knew the right settings for the six knobs, we would get the right output (an estimate of the salary) for any given input values. Before we learn the logic and mathematics to identify the right settings for the red knobs, let’s go back to the non-linearity of the hidden and output layers.
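To make the six knobs concrete, here is a minimal Python sketch of water flowing from the two input tanks to the tap. It assumes the six knobs are arranged as two inputs feeding two hidden chambers (2 × 2 = 4 knobs) and two hidden chambers feeding one output (2 × 1 = 2 knobs), with no extra bias knobs, the sigmoid lever (described in the activation function section) in every hidden and output chamber, and inputs rescaled to the range 0-1. The knob values and the function names `forward` and `sigmoid` are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    """Sprinkler-like lever: squashes any flow into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(education: float, experience: float, knobs: list) -> float:
    """Send water from the two input tanks through two hidden chambers to the tap.

    knobs[0:4] sit on the pipes from the input tanks to the hidden chambers;
    knobs[4:6] sit on the pipes from the hidden chambers to the output tap.
    """
    w1, w2, w3, w4, w5, w6 = knobs
    # Each hidden chamber mixes both inputs, then its lever adds non-linearity.
    hidden_1 = sigmoid(w1 * education + w2 * experience)
    hidden_2 = sigmoid(w3 * education + w4 * experience)
    # The output chamber mixes the two hidden flows and applies its own lever.
    return sigmoid(w5 * hidden_1 + w6 * hidden_2)

# Hypothetical knob settings; inputs are assumed to be rescaled to the range 0-1.
knobs = [0.5, -0.3, 0.8, 0.2, 1.0, -0.7]
salary_score = forward(education=0.6, experience=0.4, knobs=knobs)
print(round(salary_score, 3))
```

The result is a salary score between 0 and 1 that would still have to be mapped back to an actual salary. Finding knob values that make this score match real salaries is the job of backpropagation.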

Quick Note: Deep neural networks used for deep learning have more than one hidden layer. The overall logic and mathematics don’t change much with the addition of layers. Deep learning is extremely useful for machines to derive meaning from alternative data sources like images, videos, language, and text. To decipher these alternative data, additional layers and components are added to the network. These additional layers are no different from extra water filters and pressure boosters/regulators in our water pipeline example. Networks with such additional components go by fancy names like convolutional neural networks (CNNs) and recurrent neural networks (RNNs).
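The point that extra layers don’t change the logic much can be seen in a short sketch: a deeper network simply repeats the same mix-then-lever step once per layer. This is a minimal Python illustration assuming fully connected layers, the sigmoid lever in every chamber, and no bias knobs; the layer sizes, knob values, and the function names `layer` and `deep_forward` are hypothetical. It does not cover the specialized components of CNNs or RNNs.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs: list, weights: list) -> list:
    """One layer of chambers: each row of weights holds the knobs feeding one chamber."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]

def deep_forward(inputs: list, layers: list) -> list:
    """Send the flow through every layer in turn; a new layer is just one more loop step."""
    flow = inputs
    for weights in layers:
        flow = layer(flow, weights)
    return flow

# Hypothetical network: 2 inputs -> 3 chambers -> 3 chambers -> 1 tap.
network = [
    [[0.2, -0.4], [0.7, 0.1], [-0.5, 0.9]],                   # first hidden layer (6 knobs)
    [[0.3, -0.2, 0.8], [0.6, 0.4, -0.1], [-0.7, 0.5, 0.2]],   # second hidden layer (9 knobs)
    [[1.0, -0.6, 0.4]],                                       # output tap (3 knobs)
]
print(deep_forward([0.6, 0.4], network))
```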

Non-Linear Hidden Layers & Sprinklers

Let’s try to understand non-linearity through the garden sprinkler in the adjacent image. The idea behind a sprinkler is to water each and every part of the lawn. If you simply throw water in a straight line in one direction, you will end up with a large puddle in one corner while the rest of the lawn stays dry. An ingenious yet simple solution to this problem is to add a lever near the edge of the sprinkler. This lever oscillates because of the flow of water and spreads the water non-linearly across the lawn.

Incidentally, non-linearity turns out to be quite a powerful tool for highly accurate estimations and predictions. Many physicists would agree that non-linearity is one of nature’s best-kept secrets for creating this beautiful universe. All fluids are capable of displaying non-linear behavior; you would agree if you have ever experienced mid-air turbulence while flying.

Neural networks try to infuse non-linearity by adding similar sprinkler-like levers in the hidden layers. This often helps them identify better relationships between the input variables (for example, education) and the output (salary). It makes sense: if you stay in school forever, your earnings won’t keep rising forever, so the relationship cannot be a straight line. These levers produce the whirl, or turbulence, in the hidden and output nodes of the neural network. The levers, and the resulting non-linearity, are largely responsible for the high accuracy of neural networks’ predictions. Linear models just can’t fathom the meaning within complicated image or text datasets.
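Why are the levers necessary at all? Without them, each chamber only scales and adds the flows coming into it, and chaining such chambers collapses into a single set of knobs, i.e. one linear model, no matter how many layers you add. This minimal Python sketch checks that numerically for a two-input, two-chamber, one-tap layout with deliberately no activation function; the knob values and helper names are hypothetical.

```python
import math

def linear_layer(inputs: list, weights: list) -> list:
    """A chamber without a lever: it just scales and adds its inputs."""
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def matmul(a: list, b: list) -> list:
    """Merge two knob tables into one: (a @ b)[i][j] = sum_k a[i][k] * b[k][j]."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

first = [[0.5, -0.3], [0.8, 0.2]]   # hypothetical knobs: inputs -> hidden chambers
second = [[1.0, -0.7]]              # hypothetical knobs: hidden chambers -> tap
x = [0.6, 0.4]

# Two lever-less layers in a row...
two_layers = linear_layer(linear_layer(x, first), second)
# ...give the same answer as one layer with merged knobs.
one_layer = linear_layer(x, matmul(second, first))
print(math.isclose(two_layers[0], one_layer[0]))  # True
```

Putting a non-linear lever between the two layers breaks this shortcut, which is what lets extra layers add modelling power.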

Levers and Activation Function for Non-Linearity

How do neural networks make the data follow this sprinkler-like behavior? They use non-linear activation functions in the hidden and output nodes. Like levers, these activation functions come in many shapes and sizes; I will, however, talk only about the most popular ones. The simplest activation function is the binary step function. It is like an on/off electric switch: it is off (zero) for values of x less than 0, and on (one) for values of x greater than or equal to 0.
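The on/off switch translates directly into code. A minimal Python sketch (the name `binary_step` is my own):

```python
def binary_step(x: float) -> int:
    """On/off switch: 0 (off) for x < 0, 1 (on) for x >= 0."""
    return 1 if x >= 0 else 0

for x in [-2.0, -0.1, 0.0, 0.1, 2.0]:
    print(x, binary_step(x))
```

One thing worth noticing: the switch is flat everywhere except at 0, so nudging a knob slightly usually changes nothing at the output. That gives the plumber no hint about which way to turn the knob, which is one reason smoother levers are preferred for training.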

The classic lever among activation functions is the sigmoid, or logistic, function. Instead of an on/off switch, it behaves like the regulator of a fan or a light dimmer: it moves smoothly from off (0) to on (1). We will use the sigmoid function when we do the maths for neural networks in the next part of this series.
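Written as a formula, the sigmoid is sigma(x) = 1 / (1 + e^(-x)). A minimal Python sketch of this smooth regulator:

```python
import math

def sigmoid(x: float) -> float:
    """Smooth regulator: moves gradually from 0 (off) to 1 (on), passing 0.5 at x = 0."""
    return 1.0 / (1.0 + math.exp(-x))

for x in [-6, -2, 0, 2, 6]:
    print(x, round(sigmoid(x), 3))
```

Unlike the step switch, the output changes a little for every small turn of x, which is what makes it convenient for the calculus in the next part.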

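Finally, there are two other useful activation functions called ReLU (rectified linear unit) and TanH (hyperbolic tangent). ReLU resembles the step function in that it is off (zero) for negative values of x, but instead of jumping to one it grows in proportion to x for positive values. TanH has the same S-shape as the sigmoid function, but it moves from -1 to 1 instead of from 0 to 1.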
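Both levers are one-liners in Python. The sketch below uses the standard library’s `math.tanh` and also checks a handy identity, tanh(x) = 2 * sigmoid(2x) - 1, which shows that TanH is just a stretched and shifted sigmoid. The helper names `relu` and `sigmoid` are my own.

```python
import math

def relu(x: float) -> float:
    """Off (0) for negative x like the step switch, but grows with x instead of jumping to 1."""
    return max(0.0, x)

def sigmoid(x: float) -> float:
    """Smooth 0-to-1 regulator, included here only for comparison with tanh."""
    return 1.0 / (1.0 + math.exp(-x))

for x in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    # math.tanh is S-shaped like the sigmoid but runs from -1 to 1;
    # the last column rebuilds it from the sigmoid as 2 * sigmoid(2x) - 1.
    print(x, relu(x), round(math.tanh(x), 3), round(2 * sigmoid(2 * x) - 1, 3))
```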

Backpropagation to Find the Optimal Settings of Water Knobs

Now that you know all the important components of the neural network pipeline, let’s go back to the main objective of finding the optimal settings for the water knobs. Once you know the optimal settings of the knobs for your data, you have essentially developed the deep learning model.

Remember how I was shouting back to my building’s plumber about the water pressure while he was working on the knobs in the duct? Neural networks use a similar mechanism, called backpropagation, to identify the optimal settings of the knobs, or weights, of the network. Each time the neural network plumber changes the weights, an algorithm (playing my role) shouts back how far the output is from the expected value for the given inputs. Backpropagation then carries this error back through the pipes to work out how much each knob contributed to it.

Unlike my building’s plumber, the neural network’s plumber, let’s call him Super Mario, learns from every shout back. Each shout back helps Super Mario identify better settings for the knobs. In this iterative manner, Mario identifies the optimal settings for the knobs to deliver the right water pressure to your house without screwing up the water pressure for the neighbors. Sometimes, Mario uses additional knobs, water filters, or pressure regulators/boosters to find the best setting, as in convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Incidentally, CNNs are highly useful for machines to understand image datasets, while RNNs are equally useful for deriving meaning from human language and other sequential data.
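Mario’s shout-back loop can be sketched in a few lines of Python. This is a minimal illustration, not the exact method used later in this series: it assumes the six knobs are arranged as two inputs, two hidden chambers, and one output with no bias knobs, sigmoid levers everywhere, a squared-error "shout back", and plain gradient descent with a hypothetical learning rate of 0.5. The toy data, starting knob positions, and the names `forward`, `train_step`, and `total_loss` are all made up for illustration.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def forward(x1: float, x2: float, w: list):
    """Flow through the 2-2-1 pipeline; return both hidden flows and the tap output."""
    h1 = sigmoid(w[0] * x1 + w[1] * x2)
    h2 = sigmoid(w[2] * x1 + w[3] * x2)
    out = sigmoid(w[4] * h1 + w[5] * h2)
    return h1, h2, out

def train_step(x1: float, x2: float, target: float, w: list, learning_rate: float = 0.5) -> list:
    """One shout back: measure the error, trace it back to each knob, nudge every knob."""
    h1, h2, out = forward(x1, x2, w)
    # Error signal at the tap for the loss 0.5 * (out - target)^2, times the sigmoid slope.
    d_out = (out - target) * out * (1 - out)
    # Backpropagation: each hidden chamber's share of the error, times its own sigmoid slope.
    d_h1 = d_out * w[4] * h1 * (1 - h1)
    d_h2 = d_out * w[5] * h2 * (1 - h2)
    # Gradient of the loss with respect to each of the six knobs.
    grads = [d_h1 * x1, d_h1 * x2, d_h2 * x1, d_h2 * x2, d_out * h1, d_out * h2]
    # Gradient descent: turn each knob a little against its gradient.
    return [wi - learning_rate * gi for wi, gi in zip(w, grads)]

def total_loss(data: list, w: list) -> float:
    return sum(0.5 * (forward(x1, x2, w)[2] - t) ** 2 for x1, x2, t in data)

# Hypothetical toy data: (education, experience, salary), all rescaled to 0-1.
data = [(0.2, 0.1, 0.2), (0.5, 0.4, 0.5), (0.8, 0.3, 0.6), (0.9, 0.9, 0.8)]
knobs = [0.1, -0.2, 0.3, 0.4, -0.1, 0.2]  # hypothetical starting positions

loss_before = total_loss(data, knobs)
for _ in range(2000):
    for x1, x2, t in data:
        knobs = train_step(x1, x2, t, knobs)
loss_after = total_loss(data, knobs)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Each call to `train_step` is one shout back: the error at the tap is traced back through the pipes to tell Mario which way, and how far, to turn each knob. Repeating it thousands of times is the iterative search for better settings.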

Sign-off Note

I think neural networks and deep learning are made unnecessarily complicated by comparing them to the most sophisticated and advanced object in the known universe, i.e. the human brain. The biological neural networks in the brain developed over millions of years through evolution, whereas artificial neural networks (ANNs) and deep learning are just a few decades old. I think we would be better off treating ANNs as a plumbing job.

In the next parts of this series, be prepared to learn the mathematics of neural networks. Trust me, it will be fun with Super Mario coming to our rescue. After the theory, you will also get some hands-on practice with the plumbing job. Be prepared to get wet, since no plumber is allowed to leave the building dry.
